I have been struggling with an issue reprotecting a Protection Group within VMware Site Recovery Manager with the IBM N series (NetApp) Storage Replication Adapter.

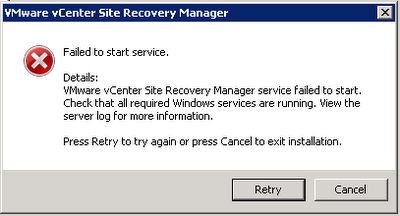

The first step, Configure Storage to Reverse Direction, would fail with “Error – Failed to reverse replication for failed over devices. SRA command ‘prepareReverseReplication’ failed. Peer array ID provided in the SRM input is incorrect Check the SRM logs to verify correct peer array ID.”

The setup was as follows:

| | Site-A | Site-B |
| --- | --- | --- |
| Array Manager | NSERIES01 | DRNSERIES01 |
| Local device | /vol/NFS_VMware_Test | /vol/NFS_VMware_Test |

NSERIES01:NFS_VMware_Test was SnapMirrored to DRNSERIES01:NFS_VMware_Test

The volume had a single virtual machine in it with a single vmdk and was running at Site-A.

I had a protection group for this replication pair with the single virtual machine in it.

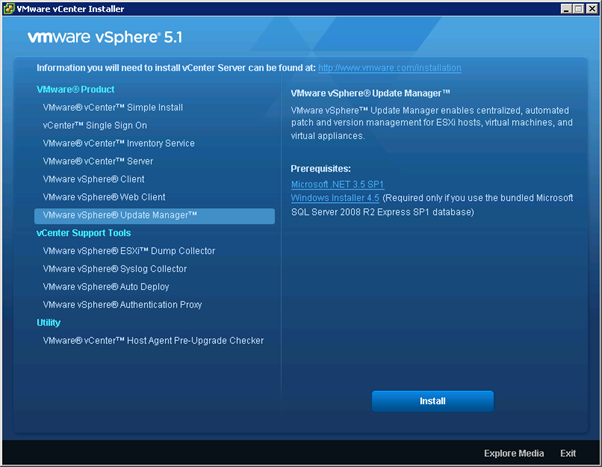

I could successfully recover the protection group at Site-B and reprotect it, so that the SnapMirror was now from DRNSERIES01:NFS_VMware_Test to NSERIES01:NFS_VMware_Test and the Recovery Plan was created at Site-A. I could then recover the protection group back to Site-A (i.e. the new fail-back functionality in SRM 5). It was when I tried to reprotect the protection group again, so that it was ready for a subsequent recovery to Site-B, that I had this issue.

I also had the issue the other way round: if I started with a Protection Group at Site-B and recovered it at Site-A, I could not perform a reprotect back to Site-B.

Checking the SRM logs showed the following:

validating if path NFS_VMware_Test is valid mirrored device for given peerArrayId

curr state = invalid, prev state = ,source name:DRNSERIES01:NFS_VMware_Test, destination:NSERIES01:NFS_VMware_Test, path=NFS_VMware_Test, arrayID:NSERIES01

Skipping /vol/NFS_VMware_Test as peerArrayId DRNSERIES01 is not valid

I placed a support call with VMware, who pointed out that the error message was coming from the SRA and that I would therefore need to place a call with IBM. IBM were struggling to find a reason for this error, and after IBM Level 2 support could not find the cause they passed it to NetApp to investigate. The first response from NetApp was to wait for the resync to complete before doing the Reprotect. However, it is the Reprotect that does the resync, so that response was obviously rubbish.

While I was waiting for IBM to come back to me I posted the issue on both the NetApp and VMware Forums and reached out to the two best guys that I know at VMware for SRM, Mike Laverick and Lee Dilworth. Both had good suggestions about making sure everything was set up exactly as detailed in the documentation, but as it already was, I still did not have a solution. Links to the Forum posts NetApp and VMware.

So I decided to dig into the Perl scripts that the SRA uses and find the problem myself. After a lot of investigation and debugging of the Perl scripts used by the NetApp (IBM N series) SRA I discovered the following:

The issue was that the filer name at the Protected Site was the same as the filer name at the Recovery Site with a prefix on it, i.e. in my case the Recovery Site filer was named NSERIES01 and the Protected Site filer was named DRNSERIES01. Remember I had already performed a fail-over and fail-back, so the Protected Site was my original Recovery Site. So yes, the filer on the Protected Site for this Protection Group is DRNSERIES01 and the Recovery Site has NSERIES01 on it.

When the Reprotect task is run, the first step is to call the SRA with the command prepareReverseReplication. This calls reverseReplication.pl, which attempts to check that the SnapMirror is broken off. It gets the status of all of the SnapMirrors from the filer at the Recovery Site, i.e. in this case NSERIES01. It then goes through each of these looking for a match of local-filer-name:volume-name in the source of the SnapMirror. For my test group it was attempting to match NSERIES01:NFS_VMware_Test. At this point the source of the SnapMirror is DRNSERIES01:NFS_VMware_Test, which is correct, but because the script uses a pattern-matching test rather than an exact comparison it matches NSERIES01:NFS_VMware_Test to DRNSERIES01:NFS_VMware_Test, as the former is contained within the latter. It then checks whether the destination of the SnapMirror matches the peerArrayID (in this case DRNSERIES01). It does not, because the destination is, correctly, NSERIES01, and so the script reports that the peerArrayID is incorrect.

If there is no match on local-filer-name:volume-name in the source of any SnapMirror, the script goes on to check the destinations of the SnapMirrors. When it finds a match it checks whether the peerArrayID matches the source of the SnapMirror, and if it does it then checks that the status of the SnapMirror is broken-off.

I never hit the issue with the first reprotect because DRNSERIES01:NFS_VMware_Test is not contained within the source of the SnapMirror (NSERIES01:NFS_VMware_Test). The script therefore goes on to the next test, checking for DRNSERIES01:NFS_VMware_Test in the destination of the SnapMirror, which it finds. It then checks the peerArrayID (NSERIES01) against the source, which also matches, and finally confirms that the SnapMirror relationship is broken-off.
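The two cases above can be reproduced with a small simulation. This is only a sketch in Python, not the SRA's actual Perl code: the function name `check_reverse_replication`, the dictionary layout and the returned strings are all hypothetical, and the logic is reconstructed purely from the description in this post. The key assumption is that the lookup behaves like a substring test.

```python
def check_reverse_replication(local_filer, volume, peer_array_id, relationships):
    """Hypothetical reconstruction of the lookup described in this post.

    relationships: list of dicts with 'source', 'destination' and 'state',
    standing in for the SnapMirror status reported by the Recovery Site filer.
    """
    local_path = f"{local_filer}:{volume}"

    # Pass 1: look for the local path in the SOURCE of each SnapMirror.
    for rel in relationships:
        if local_path in rel["source"]:  # flawed: substring, not equality
            dest_filer = rel["destination"].split(":", 1)[0]
            if dest_filer != peer_array_id:
                return "peer array ID incorrect"
            # The post does not say what happens when this check passes,
            # so the sketch simply reports the match.
            return "matched as source"

    # Pass 2: only reached if no source matched; check the DESTINATION.
    for rel in relationships:
        if local_path in rel["destination"]:
            source_filer = rel["source"].split(":", 1)[0]
            if source_filer != peer_array_id:
                return "peer array ID incorrect"
            return "ok" if rel["state"] == "broken-off" else "not broken off"

    return "no matching relationship"


# First reprotect: VM recovered at Site-B, script runs against DRNSERIES01.
first = check_reverse_replication(
    "DRNSERIES01", "NFS_VMware_Test", "NSERIES01",
    [{"source": "NSERIES01:NFS_VMware_Test",
      "destination": "DRNSERIES01:NFS_VMware_Test",
      "state": "broken-off"}],
)

# Second reprotect: VM failed back to Site-A, script runs against NSERIES01.
second = check_reverse_replication(
    "NSERIES01", "NFS_VMware_Test", "DRNSERIES01",
    [{"source": "DRNSERIES01:NFS_VMware_Test",
      "destination": "NSERIES01:NFS_VMware_Test",
      "state": "broken-off"}],
)

print(first)   # ok
print(second)  # peer array ID incorrect
```

The second call goes wrong in pass 1: `NSERIES01:NFS_VMware_Test` is found inside `DRNSERIES01:NFS_VMware_Test`, so the relationship is wrongly treated as one where the local filer is the source, and pass 2 is never reached.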

I had renamed the volume on DRNSERIES01 a while ago because I thought the issue might have been due to the volume names being the same. However, I had renamed it by putting a suffix of _Repl on the end, and therefore the script was still matching NSERIES01:NFS_VMware_Test to DRNSERIES01:NFS_VMware_Test_Repl.
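A quick way to see why the _Repl rename did not help, again as a Python sketch that assumes the SRA's test behaves like a plain substring search (the variable names are purely illustrative):

```python
local_path = "NSERIES01:NFS_VMware_Test"
renamed_source = "DRNSERIES01:NFS_VMware_Test_Repl"

# Substring search, which is how the script's test appears to behave.
substring_hit = local_path in renamed_source

# Exact comparison, which would not confuse the two filers.
exact_hit = local_path == renamed_source

# Offset at which the local path starts inside the renamed source.
offset = renamed_source.find(local_path)

print(substring_hit, exact_hit, offset)
```

The local path starts two characters into the source string, straight after the "DR" prefix, and the "_Repl" suffix falls after the end of the matched text. Neither addition interrupts the match.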

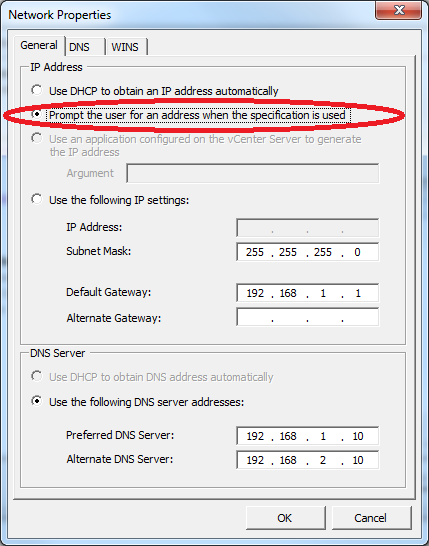

I have now configured my test group as follows:

Protected Site

Filer Name = NSERIES01

Volume Name = NFS_VMware_Test_HMR

Recovery Site

Filer Name = DRNSERIES01

Volume Name = NFS_VMware_Test_EWC

I can now recover to the Recovery Site, Reprotect, Fail-Back, Reprotect and Fail-over again, and continue performing recoveries and reprotects over and over again, as often as you can re-record on a Scotch VHS tape!

If the filer on Site-B had a suffix on its name instead of a prefix, e.g. it was named NSERIES01DR, or had a completely different name, then I would never have hit this bug in the SRA. I will be waiting for NetApp to fix the SRA. In the meantime I will be renaming all of my volumes at the recovery site to avoid this issue.
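To audit other filer and volume names for the same problem, something like the following Python sketch could be used. The helper `would_collide` is hypothetical and assumes, as above, that the SRA's comparison behaves like a substring test on filer:volume strings. It is not taken from the SRA.

```python
def would_collide(local_filer, local_volume, remote_filer, remote_volume):
    """Return True if the local filer:volume string is contained in the
    remote one without being identical to it (hypothetical audit helper)."""
    local_path = f"{local_filer}:{local_volume}"
    remote_path = f"{remote_filer}:{remote_volume}"
    return local_path != remote_path and local_path in remote_path


vol = "NFS_VMware_Test"

# Prefix on the Site-B filer name: collides when run from NSERIES01.
prefix_case = would_collide("NSERIES01", vol, "DRNSERIES01", vol)

# Suffix on the Site-B filer name: the ':' no longer lines up, so no match.
suffix_case = would_collide("NSERIES01", vol, "NSERIES01DR", vol)

# The renamed volumes: different endings break the match.
renamed_case = would_collide(
    "NSERIES01", "NFS_VMware_Test_HMR",
    "DRNSERIES01", "NFS_VMware_Test_EWC",
)

print(prefix_case, suffix_case, renamed_case)
```

Both directions need checking for each pair, since the script runs against whichever filer is at the Recovery Site at the time. Giving each volume a different ending works because the local string then never appears in full inside the remote one.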

UPDATE FROM VAUGHN STEWART: This is a known issue and will be fixed in an SRA 2.1.x release currently undergoing VMware certification.